Equivalence Relations

Standard post by ancoop on October 31, 2018

[callout headingicon=”noicon” textalign=”textleft” type=”basic”]All men are created equal.

Thomas Jefferson, Declaration of Independence

I don’t hold with equality in all things, just equality before the law, nothing more.

character of Rep. Thaddeus Stevens in the film Lincoln

[/callout]

We are ordinarily completely comfortable writing things like $$\frac{1}{2}=\frac{2}{4}=\frac{500}{1000}$$ The symbols $\frac{1}{2}$ and $\frac{500}{1000}$, though, are definitely not the same. One of them, for example, has four digits in the denominator, whereas the other has only one digit in the denominator. So in what sense is it the case that these three symbols are the same? Intuitively, they represent the same thing.

Children are usually introduced to fractions as being parts of a whole. What is half a pizza? It’s what happens when you divide one pizza into two parts. Then we think of fractions like $\frac{2}{4}$ as either: one piece, if you divide two pizzas into four parts, or two pieces if you divide one pizza into four parts. But these are manifestly not the same thing! Two halves of a Ming vase are not the same as a whole Ming vase, and if you ordered a pizza at the pizza shop, you’d be very upset when they brought out a pizza in a million little pieces.

Yet we write $\frac{2}{2}=1=\frac{1000000}{1000000}$ and don’t really think about it. We’re choosing to ignore some differences between two things, and treat them like they’re equal. Like Thaddeus Stevens, we are not asserting equality in all things, only equality before the law (of fractions).

An equivalence relation is a way of formalizing this process of picking what to ignore and what to pay attention to.

Equality

The following properties are true for the identity relation $I$ (we usually write $(x,y)\in I$ as $x=y$):

- $I$ is *reflexive*: for any object $x$, $x=x$ (or $(x,x)\in I$).

- $I$ is *symmetric*: for any objects $x$ and $y$, if $x=y$ then it must be the case that $y=x$.

- $I$ is *transitive*: for any objects $x$, $y$, and $z$, if $x=y$ and $y=z$ then it must be the case that $x=z$.

Equality also has the replacement property: if $A=B$, then any occurrence of $A$ can be replaced by $B$ without changing the meaning. So for example, when we write $1+1=2$, we know that $\sin(1+1)=0$ is false, because $\sin(2)=0$ is false.

Notice that Thomas Jefferson’s claim that all men are created equal does not have this property. Michael Jackson is a man, and Bill Nye is a man, so if all men are equal, we should be able to replace any occurrence of “Michael Jackson” with “Bill Nye” without loss of meaning. It’s true that

Michael Jackson is the King of Pop.

But we can’t say that this means

Bill Nye is the King of Pop.

So it seems like we at least have to give up the replacement property, if we want to make sense of notions like equal before the law.

Equivalence

We want to treat different things as though they were the same, so we need the properties of equality. This motivates the following definition:

Definition. A relation $R$ on the set $A$ is an equivalence relation if it is reflexive, symmetric, and transitive, that is, if:

- $\forall x\in A, x\operatorname{R}x$

- $\forall x,y\in A,\left(x\operatorname{R}y\Rightarrow y\operatorname{R}x\right)$

- $\forall x,y,z\in A, \left((x\operatorname{R}y\wedge y\operatorname{R}z)\Rightarrow x\operatorname{R}z\right)$

E.g. Equality is an equivalence relation.
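For a relation on a small finite set, the three conditions in the definition can be checked by brute force. The following is a minimal Python sketch, assuming the relation is stored as a set of ordered pairs; the name `is_equivalence` and the sample relations are illustrative only.

```python
from itertools import product

def is_equivalence(domain, relation):
    """Check reflexivity, symmetry, and transitivity on a finite set.

    `domain` is a finite collection of objects; `relation` is a set of
    ordered pairs (x, y) meaning "x R y". Both names are illustrative.
    """
    domain = list(domain)
    # Reflexive: every x is related to itself.
    reflexive = all((x, x) in relation for x in domain)
    # Symmetric: every pair also appears reversed.
    symmetric = all((y, x) in relation for (x, y) in relation)
    # Transitive: whenever x R y and y R z, also x R z.
    transitive = all(
        (x, z) in relation
        for (x, y), (w, z) in product(relation, repeat=2)
        if y == w
    )
    return reflexive and symmetric and transitive

# Equality on {1, 2, 3}.
identity = {(x, x) for x in {1, 2, 3}}
print(is_equivalence({1, 2, 3}, identity))  # True

# "Less than or equal to" is reflexive and transitive but not symmetric.
leq = {(x, y) for x in {1, 2, 3} for y in {1, 2, 3} if x <= y}
print(is_equivalence({1, 2, 3}, leq))  # False
```

A check like this only works for finite sets; for relations on $\mathbb{R}$ or $\mathbb{Z}$ a proof is still needed.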

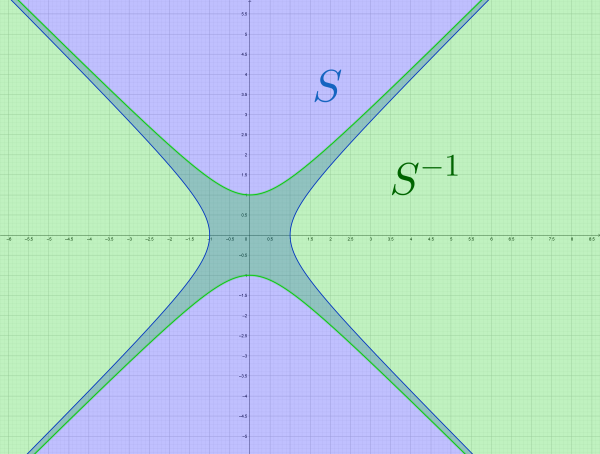

E.g. Consider the relation $R$ on $\mathbb{R}$ given by $x \operatorname{R} y$ iff $x^2=y^2$. We’ll show $R$ is an equivalence relation.

Proof. First show that $R$ is reflexive. Let $x$ be a real number. Then $x^2=x^2$, so $x \operatorname{R}x$.

Now show that $R$ is symmetric. Suppose $x$ and $y$ are real numbers with $x \operatorname{R} y$. Then $x^2=y^2$, so $y^2=x^2$, so $y\operatorname{R} x$.

Finally, show that $R$ is transitive. Suppose $x$, $y$, and $z$ are real numbers with $x\operatorname{R} y$ and $y\operatorname{R}z$. Then $x^2=y^2$ and $y^2=z^2$, so $x^2=z^2$, i.e. $x\operatorname{R} z$. $\Box$

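In fact, it’s easy to come up with an equivalence relation on any set $A$, given a function $f$ with domain $A$: define $x\operatorname{R}_f y$ to mean $f(x)=f(y)$. Reflexivity, symmetry, and transitivity of $R_f$ follow directly from the same properties of equality, exactly as in the proof above, which is the case $f(x)=x^2$.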

E.g. Let $C$ be the relation on $\mathbb{R}\times\mathbb{R}$ given by: $(x,y) \operatorname{C} (z,w)$ iff $x^2+y^2=z^2+w^2$. Then $C$ is an equivalence relation.

E.g. Let $T$ be the relation on $\mathbb{Z}$ given by $x \operatorname{T} y$ if $x$ and $y$ have the same parity (i.e. are either both even or are both odd). Then $T$ is an equivalence relation.
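The relations $R$, $C$, and $T$ all fit the pattern $f(x)=f(y)$, for the functions $f(x)=x^2$, $f(x,y)=x^2+y^2$, and $f(x)=$ the remainder of $x$ on division by $2$. Here is a short Python sketch of that idea; the helper name `related_by` is hypothetical, and integers stand in for real numbers to avoid floating-point rounding.

```python
def related_by(f):
    """Return the relation R_f, where x R_f y means f(x) == f(y)."""
    return lambda x, y: f(x) == f(y)

# The relation R: x R y iff x^2 = y^2.
same_square = related_by(lambda x: x * x)

# The relation C: (x, y) C (z, w) iff x^2 + y^2 = z^2 + w^2.
same_circle = related_by(lambda p: p[0] ** 2 + p[1] ** 2)

# The relation T: same parity. In Python, n % 2 is 0 or 1 for every integer n.
same_parity = related_by(lambda n: n % 2)

print(same_square(3, -3))            # True
print(same_circle((3, 4), (5, 0)))   # True
print(same_parity(2, 7))             # False
```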

An example from algebra: modular arithmetic

The following generalizes the previous example $T$:

Definition. Let $p$ be an integer. For integers $m$ and $n$, we say $m$ is equal to $n$ modulo $p$ if $m-n$ is a multiple of $p$, i.e. if there is $k\in\mathbb{Z}$ with $m-n=kp$.

Theorem. Equality modulo $p$ is an equivalence relation.

Proof. First we’ll show that equality modulo $p$ is reflexive. Let $m\in \mathbb{Z}$. Then $m-m=0=0\cdot p$, so $m$ is equal to $m$ modulo $p$.

Now we’ll show that equality modulo $p$ is symmetric. Suppose $m$ is equal to $n$ modulo $p$. Then there is some $k$ with $m-n=kp$. So $n-m=(-k)\cdot p$, which shows that $n-m$ is a multiple of $p$, hence $n$ is equal to $m$ modulo $p$.

Now we’ll show that equality modulo $p$ is transitive. Suppose $m$ is equal to $n$ modulo $p$ and $n$ is equal to $\ell$ modulo $p$. Then $m-n$ and $n-\ell$ are both multiples of $p$. Now $$m-\ell= (m-n) + (n-\ell)$$

so $m-\ell$ is the sum of two multiples of $p$, hence a multiple of $p$ itself. Thus $m$ is equal to $\ell$ modulo $p$. $\Box$
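To see the transitivity step with actual numbers (the values here are chosen only for illustration), take $p=5$, $m=17$, $n=7$, and $\ell=2$. Then $17-7=2\cdot 5$ and $7-2=1\cdot 5$, and

\begin{equation*}17-2=(17-7)+(7-2)=2\cdot 5+1\cdot 5=3\cdot 5\end{equation*}

so $17$ is equal to $2$ modulo $5$.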

It turns out that the arithmetic of $\mathbb{Z}$ (that is, addition, subtraction, and multiplication) still makes sense if we consider equality modulo $p$ instead of ordinary equality. We call this *modular arithmetic* and refer to $p$ as the modulus.

The following are standard notations for “$m$ is equal to $n$ modulo $p$”:

\begin{equation*}
\begin{aligned}
m&=n\mod p\\
m&=_pn\\
m&\equiv_pn
\end{aligned}
\end{equation*}

Theorem. If $m\equiv_pn$ and $r\equiv_ps$, then $m+r\equiv_pn+s$ and $mr\equiv_pns$.

Proof. Suppose $m\equiv_pn$ and $r\equiv_ps$. That is, suppose $m-n$ and $r-s$ are multiples of $p$. Then

\begin{equation*}(m+r)-(n+s)=(m-n) + (r-s)\end{equation*}

is the sum of multiples of $p$, hence a multiple of $p$. So $m+r\equiv_pn+s$. Also

\begin{equation*}\begin{aligned}mr-ns&=mr-ms+ms-ns\\
&=m(r-s)+(m-n)s\end{aligned}\end{equation*}

so that $mr-ns$ is the sum of two terms, each of which is a multiple of $p$. Hence $mr-ns$ is a multiple of $p$, which is to say $mr\equiv_pns$. $\Box$
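The theorem can also be spot-checked numerically. The sketch below is not a substitute for the proof; it only tests the two claims over a small range of integers, and the function names `congruent` and `check_theorem` are made up for this illustration.

```python
def congruent(m, n, p):
    """True when m - n is a multiple of p, i.e. m is equal to n modulo p."""
    if p == 0:
        return m == n  # the only multiple of 0 is 0 itself
    return (m - n) % p == 0

def check_theorem(p, values):
    """Test the sum and product claims for every choice drawn from `values`."""
    for m in values:
        for n in values:
            if not congruent(m, n, p):
                continue
            for r in values:
                for s in values:
                    if congruent(r, s, p):
                        assert congruent(m + r, n + s, p)
                        assert congruent(m * r, n * s, p)
    return True

print(check_theorem(7, range(-10, 11)))  # True
```

For instance, $17\equiv_7 3$ and $9\equiv_7 2$, and indeed $17\cdot 9-3\cdot 2=147=21\cdot 7$.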

Exercise. Show that if $m\equiv_pn$ and $r\equiv_ps$, then $m-r\equiv_pn-s$.

Exercise. What do symmetry and reflexivity look like in the digraph?